After I taught the Multicore Programming course at Vrije Universiteit Brussel last year, I asked my students to fill in a survey, including questions about their use of (generative) AI tools. Below are the results.

Q1: Which AI tools?

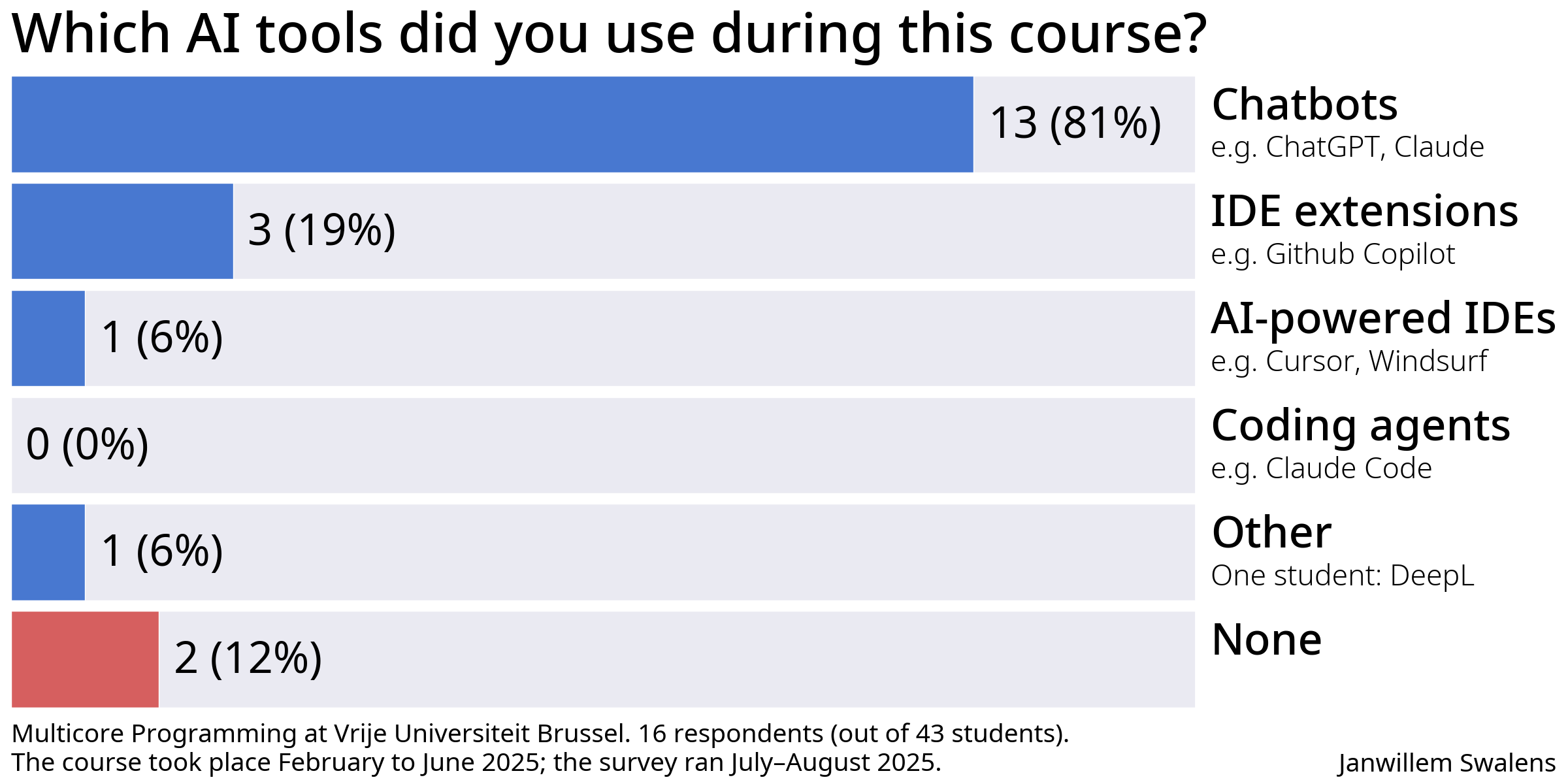

Out of 16 respondents, 14 students (88%) indicated using AI tools during the course; only 2 did not. Most (13 students, 81%) used chatbots. Additionally, 3 used IDE extensions such as GitHub Copilot and 1 used an AI-powered IDE like Cursor. (They could select multiple options.)

No one reported using a coding agent. This is probably because these tools are so recent: Claude Code was released only in February 2025 (and is paid) and Gemini CLI only in June (for free), while students worked on their projects between March and June 2025.

We can conclude that AI is commonplace, especially chatbots, while newer tools like coding agents are still uncommon. It’ll be interesting to see how this evolves over the next few years.

Q2: For which tasks?

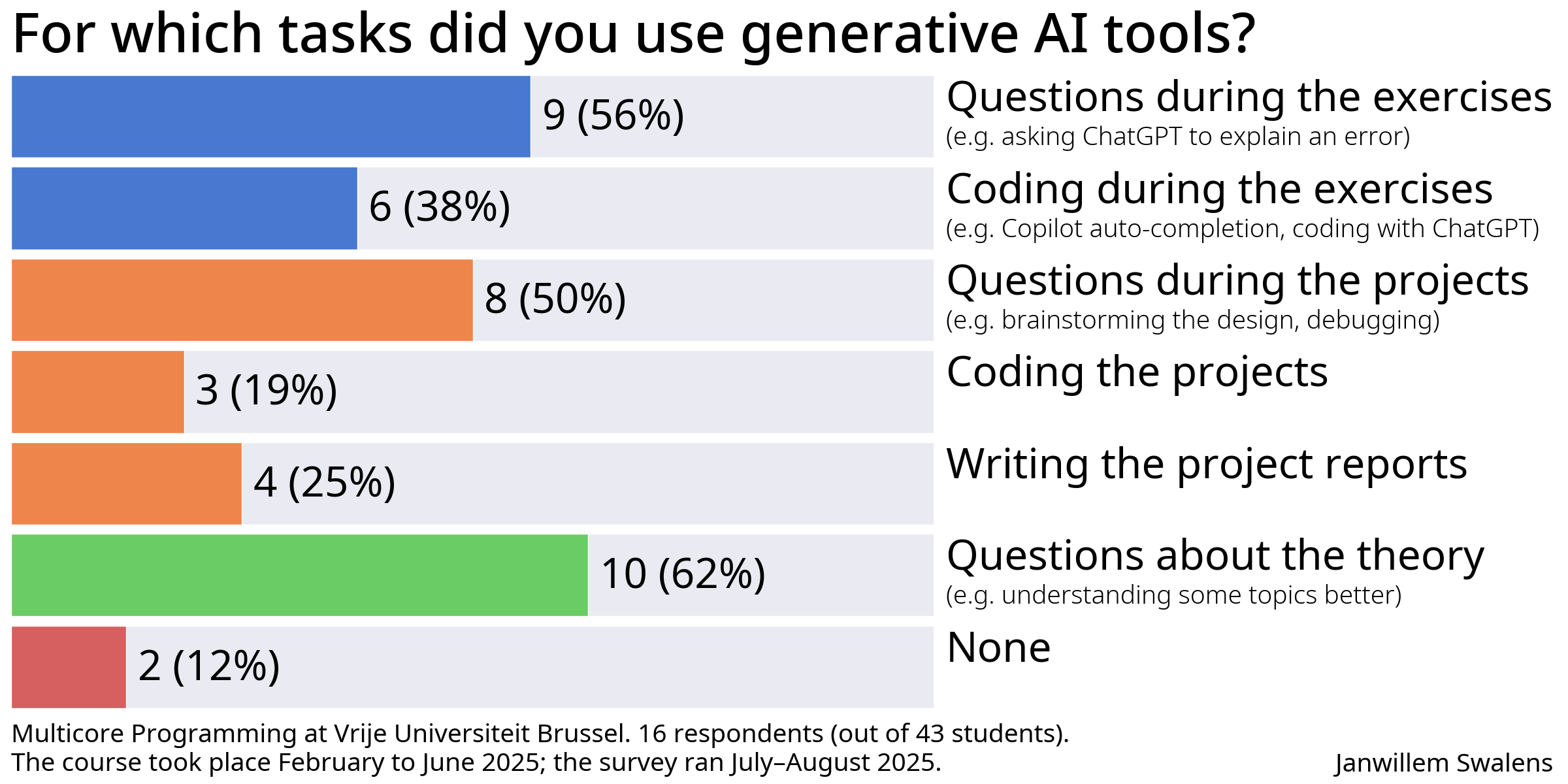

Students reported using AI tools for a variety of tasks:

- 9 students (56%) asked ChatGPT questions during exercise sessions. I also observed this in class. This is honestly a bizarre experience as a teacher: students use ChatGPT even though I’m right there. Some students seem to prefer asking their questions to a chatbot over a human being (fear of being judged?). Overall, my experience is that AI chatbots are remarkably effective at spotting and explaining mistakes, and can be very conducive to the learning process.

- 6 students (38%) used AI to code during the exercises. I saw several students with GitHub Copilot enabled in their IDE. This is something we’ll have to figure out: if the goal of an exercise is to practice, should you use a tool that gives you the answer immediately? Students may simply have the Copilot extension enabled by default in VS Code, so we’ll need to explicitly ask them to disable it.

- 8 students (50%) used AI to ask questions while working on their programming projects, 3 (19%) used it to write code for their projects, and 4 (25%) used it to write reports. My view on this is nuanced: using AI as a companion for brainstorming, debugging, or gaining a deeper understanding can be very educational. However, delegating core parts of the projects to AI means you are no longer learning. Similarly, using AI to improve or spell-check reports is fine, but of course students should remain in control of and responsible for what they write.

- Finally, a surprising 10 students (63%) asked chatbots to further explain concepts from the theory lectures. This seems like an excellent use of AI, although I wonder how high the risk of hallucinations is. (And it makes me wonder what I should improve!)

In an open question on their experience with AI, many students said it gave them a productivity boost, e.g. for debugging, generating test code and data, and writing repetitive code. They were also well aware of its limitations: hallucinations, inconsistencies, and the need to guide the AI. Some mentioned that it had replaced Google or Stack Overflow for them. They indicated it helped them understand some topics better, but they also emphasized that it remains important to understand and master the material yourself.

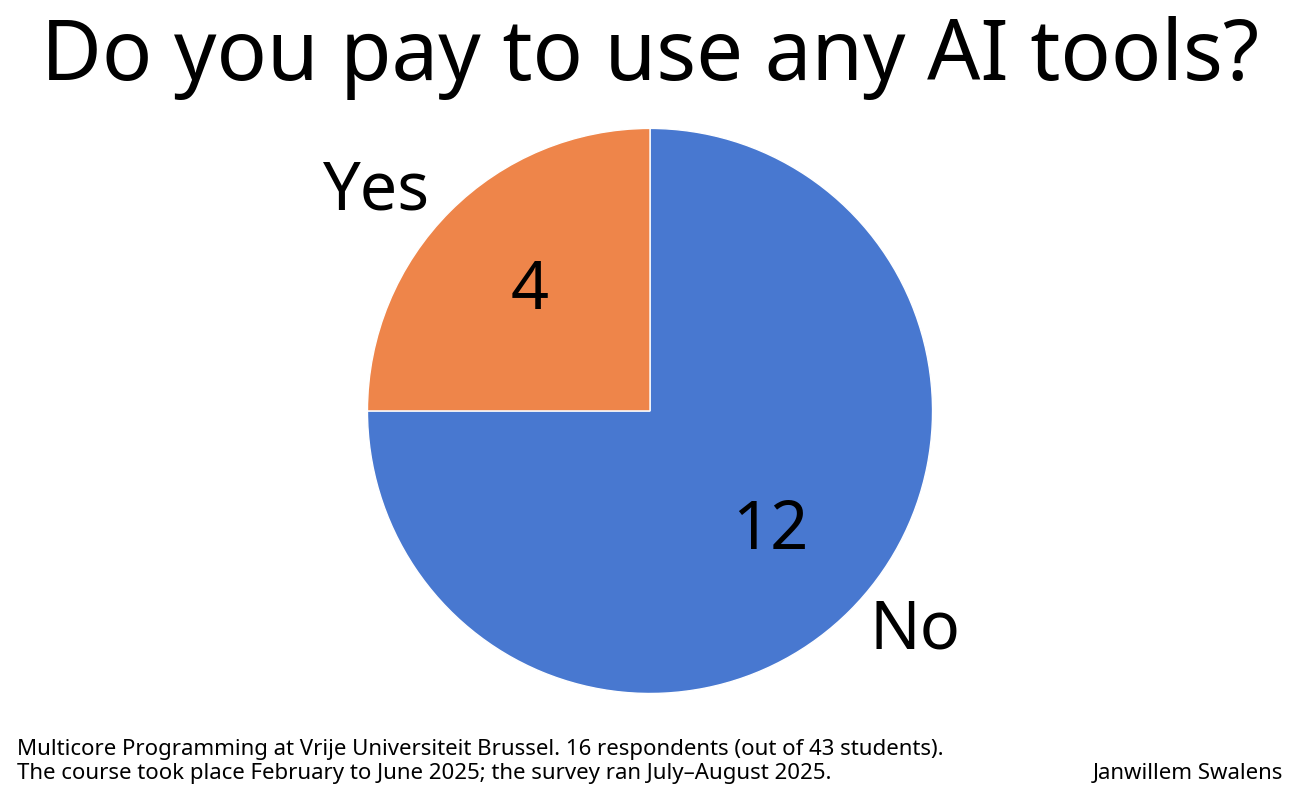

Q3: Payment

4 students (25%) said they paid for AI tools. This is concerning: we don’t want a situation where students who can afford (better) AI tools end up getting a better education or higher grades. This is certainly something to pay attention to in the future.

Policy on AI use

Last year, we didn’t yet have a specific policy on the use of AI tools, only our standard policy on plagiarism and an oral defense that checks students’ work and understanding. AI tools were in a gray zone. Next year, we’ll introduce a clear policy, but this will be a tricky balancing act. Student opinions varied widely (paraphrased):

“It should be prohibited, otherwise we don’t learn.”

“It should be allowed only for reports, not for code.”

“It should be allowed, because in the workplace it will also be there.”

“It’s impossible to detect, so there’s no point in prohibiting it anyway.”

Remarks and disclaimers

Out of 43 students registered for the course, only 16 completed the survey. In my experience, it’s usually the more ‘motivated’ students who fill in surveys, which skews the results. In the open questions, many provided long and thoughtful answers, suggesting that this topic is on their minds as well. The survey was labelled as anonymous to encourage honest answers. Finally, this is an elective Master’s course, with students who already know how to program well. For a first-year Bachelor course, my policy would be different from the one for an advanced Master’s course.